Writing on software, systems, and hard-won lessons.


What is Claude insights? The /insights command in Claude Code generates an HTML report analysing usage patterns across all your Claude Code sessions. It's designed to help you understand how you interact with Claude, what's working well, and how to improve your workflows.

Claude insights uses local cache data from your machine, not your entire Claude account history. Since I created a new WSL environment specifically for coding this blog, mine only covers the past 28 days: 64 sessions where Claude and I changed 612 files (+48k/-15k lines) across 586 messages. If you've been using Claude Code for months on the same machine, your report will cover a longer time frame.

From the insights report:

Your 106 hours across 64 sessions reveal a power user pushing Claude Code hard on full-stack bug fixing and feature delivery, but with significant friction from wrong approaches and buggy code that autonomous, test-driven workflows could dramatically reduce.

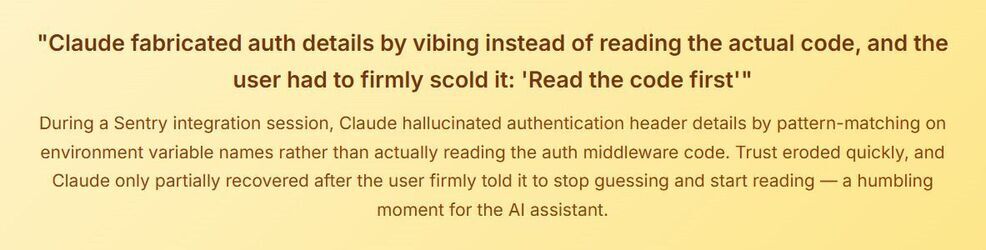

What the report says is hindering: Claude too often latches onto the wrong root cause during debugging (CSP vs CORS, Amplify routing misdiagnoses, fabricated auth details) and delivers features missing critical pieces like DB constraints, nav entries, or validation, forcing me to find the gaps while manually testing.

Below are the practical improvements I made to my AI workflow (CLAUDE.md, prompts, skills, hooks) based on the insights report. None of this prevents Claude from being wrong. It just makes the wrongness faster to catch and cheaper to fix.

"The user had to firmly scold it" was the report ratting me out for yelling at Claude.

These instructions go in CLAUDE.md in your project root. Claude Code reads this file at the start of every session.

This will help avoid hallucinations like made-up auth header details or misdiagnosed CORS issues.

When debugging production issues, ALWAYS read the actual code and configuration files before proposing a fix. Never assume auth flows, API patterns, or infrastructure config based on env var names or conventions. Trace the actual code path first.

Claude shipped features as large, untested commits without validating end-to-end integration, leading to cascading fix-up commits for missing migrations, broken deployment config, and confused API contracts.

After implementing any feature, verify completeness by checking: 1) database constraints/migrations, 2) backend validation, 3) API spec/types, 4) navigation/menu entries, 5) settings/toggles, 6) tests. Do NOT consider a feature done until all layers are covered.
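The checklist above can also be checked mechanically against a diff. A minimal sketch, assuming a hypothetical repository layout: the path patterns (`migrations/`, `backend/`, `frontend/`, `api/openapi.yaml`) are illustrative and not taken from the actual project. It reports which layers a change set never touched, so they get a second look.

```python
from fnmatch import fnmatch

# Hypothetical path patterns per layer; adjust to the real repository layout.
LAYER_PATTERNS = {
    "migrations": ["migrations/*.sql"],
    "backend": ["backend/*.go"],
    "api_spec": ["api/openapi.yaml"],
    "frontend": ["frontend/*.ts", "frontend/*.tsx"],
    "tests": ["*_test.go", "*.test.ts", "*.test.tsx"],
}


def untouched_layers(changed_files):
    """Return the layers that have no matching file in the change set."""
    missing = []
    for layer, patterns in LAYER_PATTERNS.items():
        touched = any(
            fnmatch(path, pattern)
            for path in changed_files
            for pattern in patterns
        )
        if not touched:
            missing.append(layer)
    return missing


changed = ["backend/handlers/likes.go", "frontend/app/likes/page.tsx"]
print(untouched_layers(changed))
```

An untouched layer is not automatically a bug (not every feature needs a migration), so this works better as a nudge to look than as a hard gate.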

I'll give Claude something closer to 5 seconds, just enough to catch flaws without killing my flow. The full preflight suite can wait until commit time.

Always run preflight checks (formatting, linting, tests) BEFORE reporting that a task is complete. Fix any prettier/lint/test failures before declaring success.

This should help avoid Claude re-enabling nonce validation in the Go backend but never checking whether the frontend could actually send one. Production login broke because the backend started demanding a nonce that the frontend had no code to fetch. The backend change should have come with "does the frontend handle this?"
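One way to turn "does the frontend handle this?" into a failing test instead of a production incident is to keep the fields the backend requires and the fields the client sends side by side. A minimal sketch with hypothetical field names (`id_token`, `nonce`); the real login payload is not shown in this post.

```python
def missing_request_fields(required_by_backend, sent_by_frontend):
    """Return required fields the client never sends, in sorted order."""
    return sorted(set(required_by_backend) - set(sent_by_frontend))


# Hypothetical contract: the backend now insists on a nonce...
backend_required = {"id_token", "nonce"}
# ...but the frontend login call still only sends the token.
frontend_sends = {"id_token"}

gaps = missing_request_fields(backend_required, frontend_sends)
print(gaps)
```

A non-empty result means the backend change is not safe to ship on its own.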

This is a full-stack platform with a Go backend, Next.js/TypeScript frontend, SQL migrations, Terraform infrastructure, and AWS Amplify deployments. When making changes, consider the impact across all layers. The primary languages are TypeScript and Go.

For efficiency, I don't want Claude to do a deep pass on every task. But when debugging or reviewing code, surface-level findings are often wrong or incomplete, so this instruction makes "look deeper" the default for those two cases.

When asked to review code or investigate bugs, do a DEEP first pass. Don't produce surface-level findings. Check for false positives before reporting - verify each finding by reading surrounding code. If the user says 'look deeper', treat it as a signal the first pass was insufficient.

Most of the time Claude checks Copilot or other AI suggestions before acting on them. But twice in the past month Claude applied Copilot suggestions that were false positives, and they caused bugs.

When evaluating Copilot PR review comments or external code review suggestions, do NOT blindly apply them. Investigate each suggestion against the actual codebase first. Some suggestions cause breaking changes.

Skills are reusable commands. Create .claude/skills/<name>/SKILL.md in your project, one directory per skill.

# Code Review Skill

1. Read ALL changed files thoroughly before commenting
2. For each finding, verify it's real by reading surrounding code
3. Check for false positives - do NOT report speculative issues
4. Categorize as critical/non-critical
5. For external review comments (Copilot etc), investigate before applying

# Preflight Check Skill

1. Run `go vet ./...` and `go build ./...`
2. Run `npx tsc --noEmit`
3. Run `npx prettier --check .`
4. Run the full test suite
5. Report any failures and fix them before declaring complete
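The same steps can live in a small script, so Claude and I run exactly the same gate. A minimal sketch: the commands are the ones listed in the skill, the full test suite (step 4) is left out because this post does not say how it is invoked, and the `runner` parameter is an addition that lets the logic be tried without Go or Node installed.

```python
import subprocess

# Commands from the preflight skill; step 4 (full test suite) is omitted
# because its invocation is project-specific.
PREFLIGHT_COMMANDS = [
    ["go", "vet", "./..."],
    ["go", "build", "./..."],
    ["npx", "tsc", "--noEmit"],
    ["npx", "prettier", "--check", "."],
]


def run_command(command):
    """Run one command and return its exit code (127 if it is not installed)."""
    try:
        return subprocess.run(command, capture_output=True).returncode
    except FileNotFoundError:
        return 127


def preflight(commands=PREFLIGHT_COMMANDS, runner=run_command):
    """Run every check, even after a failure, and return the failed commands."""
    failures = []
    for command in commands:
        if runner(command) != 0:
            failures.append(" ".join(command))
    return failures
```

Running every check instead of stopping at the first failure means a single pass surfaces the formatting and type errors together.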

Create .claude/settings.json in your project root, and the hooks will run automatically after Claude makes any changes.

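Adding this to the config runs prettier after every file edit, and `go vet` plus a TypeScript type check after every response. The event names (PostToolUse, Stop), the nested "hooks" arrays, and reading the edited path from the JSON the hook receives on stdin (via jq, which must be installed) follow the Claude Code hooks documentation as I understand it; check the current docs if a hook does not fire:

{
  "hooks": {
    "PostToolUse": [
      {
        "matcher": "Edit|Write",
        "hooks": [
          {
            "type": "command",
            "command": "jq -r '.tool_input.file_path' | xargs npx prettier --write 2>/dev/null; echo 'formatted'"
          }
        ]
      }
    ],
    "Stop": [
      {
        "hooks": [
          {
            "type": "command",
            "command": "go vet ./... 2>&1 | head -5; npx tsc --noEmit 2>&1 | head -5"
          }
        ]
      }
    ]
  }
}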
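If the one-liner gets unwieldy, the hook command can point at a small script instead. A minimal sketch, assuming the hook receives the tool call as JSON on stdin with the edited path under `tool_input.file_path` (worth checking against the current Claude Code hooks documentation). The extension-to-formatter mapping is illustrative.

```python
import json
import subprocess
import sys

# Illustrative mapping from file extension to formatter command prefix.
FORMATTERS = {
    ".go": ["gofmt", "-w"],
    ".ts": ["npx", "prettier", "--write"],
    ".tsx": ["npx", "prettier", "--write"],
}


def formatter_command(payload):
    """Build the formatter command for the edited file, or None to skip."""
    path = payload.get("tool_input", {}).get("file_path", "")
    for extension, prefix in FORMATTERS.items():
        if path.endswith(extension):
            return prefix + [path]
    return None


def main():
    raw = sys.stdin.read()  # assumed: the hook input arrives on stdin
    if not raw.strip():
        return  # nothing to do when run without hook input
    command = formatter_command(json.loads(raw))
    if command is not None:
        subprocess.run(command, check=False)


if __name__ == "__main__":
    main()
```

Choosing the formatter by extension keeps prettier away from Go files, which the catch-all one-liner only hides by discarding errors.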

From the insights report:

Multiple sessions show a pattern where Claude jumps to fixes before understanding root causes - misdiagnosing CORS vs CSP, missing Terraform config issues, applying unnecessary rewrites. Your most successful debugging sessions were when Claude traced the actual code path. Starting with 'investigate only, don't change anything yet' would prevent the wrong-approach friction that appeared in 31 sessions.

Investigate this production issue: [describe symptom]. Read the actual code paths, check infrastructure config (Terraform, Amplify), and trace the request flow. Do NOT make any changes yet - just report your findings with evidence from the code.

From the insights report:

Your book reviews, mental models, likes/views, and page toggle features all suffered from incomplete implementations — missing DB constraints, nav entries, settings toggles, or validation. Giving Claude an explicit checklist upfront would prevent the pattern of you discovering missing pieces after the fact. This is especially important for your full-stack Go + Next.js + SQL architecture.

Implement [feature]. Before marking done, verify ALL of these are covered: 1) SQL migration with proper constraints, 2) Go backend handlers + validation, 3) API spec/OpenAPI updates, 4) TypeScript types, 5) Frontend pages + components, 6) Navigation menu entries, 7) Settings toggles if applicable, 8) Tests passing, 9) Preflight checks clean.

From the insights report:

You frequently triage Copilot PR feedback (6+ sessions of code review triage). When Claude investigates first, it correctly dismisses false positives and you agree. When it blindly applies suggestions, it causes breaking changes. Being explicit about 'investigate then propose' vs 'just apply' saves significant rollback time. Your best triage sessions had Claude disagree with Copilot with clear reasoning.

Review these Copilot PR comments one by one. For EACH comment: 1) Read the actual code being discussed, 2) Determine if the suggestion is valid or a false positive, 3) Explain your reasoning, 4) Only make the change if you're confident it's correct and won't break anything. Do NOT blindly apply suggestions.

From the insights report:

Getting started: Use Claude Code's headless mode or the Task tool (you've already used it 63 times) to spawn sub-agents that run tests in a loop. Combine with your existing preflight checks so Claude must pass all gates before presenting results.

Fix this bug: [describe]. Before presenting your solution, you MUST: 1) Write or identify the tests that reproduce it, 2) Run the full test suite, 3) If tests fail, fix your code, 4) Repeat until ALL tests pass including preflight checks, 5) Only then present your changes. Do NOT ask me to verify intermediate steps - iterate autonomously until green.
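The loop that prompt describes is simple enough to write down. A minimal sketch: `run_tests` and `attempt_fix` are hypothetical callbacks standing in for the test suite and for whatever the agent changes between runs, and the `max_rounds` cap is an addition that is not in the prompt, so a stuck loop stops and reports instead of spinning forever.

```python
def iterate_until_green(run_tests, attempt_fix, max_rounds=5):
    """Alternate test runs and fixes until tests pass or rounds run out.

    run_tests() returns a list of failing test names (empty means green).
    attempt_fix(failures) tries to address them before the next run.
    Returns (passed, rounds_used).
    """
    for round_number in range(1, max_rounds + 1):
        failures = run_tests()
        if not failures:
            return True, round_number
        attempt_fix(failures)
    # One last run so a fix made in the final round still counts.
    return (not run_tests()), max_rounds
```

Returning the number of rounds makes it easy to spot bugs that needed several attempts, which are usually the ones worth reviewing by hand.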

From the insights report:

Getting started: Use the Task tool to spawn specialized sub-agents for each layer (backend, frontend, database), giving each the full context of the feature spec. Have the parent agent coordinate integration and run end-to-end validation.

Add a new content type called [name] to my platform (Go backend, Next.js frontend, PostgreSQL). Use the Task tool to work in parallel: Task 1: SQL migration, sqlc queries, seed data. Task 2: Go API handlers, routes, OpenAPI spec. Task 3: Next.js admin pages and public pages. Task 4: Settings toggles, nav entries, sitemap. After all tasks complete, verify integration: run sqlc generate, go build, pnpm build, full test suite. Fix integration issues. Present complete feature only when everything passes.
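The shape of that prompt is a fan-out followed by a single integration gate. A minimal sketch of the pattern only: the Task tool is not scripted this way, and the zero-argument callables below are stand-ins for whatever each sub-agent does.

```python
from concurrent.futures import ThreadPoolExecutor


def run_layers_then_integrate(layer_tasks, integrate):
    """Run independent layer tasks in parallel, then integrate if all succeed.

    layer_tasks maps a layer name to a zero-argument callable returning True
    on success. integrate is a zero-argument callable that runs only when
    every layer succeeded. Returns (failed_layers, integrated).
    """
    with ThreadPoolExecutor(max_workers=max(1, len(layer_tasks))) as pool:
        futures = {name: pool.submit(task) for name, task in layer_tasks.items()}
        results = {name: future.result() for name, future in futures.items()}
    failed = sorted(name for name, ok in results.items() if not ok)
    if failed:
        return failed, False
    return [], bool(integrate())
```

The integration step is skipped entirely when any layer fails, which mirrors "present complete feature only when everything passes".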

From the insights report:

Getting started: Structure audits as multi-pass workflows using TodoWrite (already used 202 times) to track findings, with mandatory verification steps where Claude must cite exact file:line evidence and run code to confirm each issue is real.

Audit this codebase for [bugs/security/performance]. Follow this process: PASS 1 - Discovery: Grep and Read every relevant file. Log potential issues to TODO with file:line refs. PASS 2 - Verification: For EACH finding, re-read context (50+ lines), trace the code path, confirm it's real. Remove findings you cannot verify by reading code. PASS 3 - Prioritization: Rate verified issues by severity with justification. PASS 4 - Self-check: Ask yourself: 'Did I fabricate details? Did I assume without reading?' Remove anything uncertain. Present final verified list with exact citations.
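The verification and prioritization passes amount to a filter followed by a sort. A minimal sketch, assuming a four-level severity scale (the prompt asks for a severity rating but does not define one); the file paths in the usage example are made up.

```python
from dataclasses import dataclass

# Assumed severity scale; lower number sorts first.
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    file: str
    line: int
    summary: str
    severity: str = "low"
    verified: bool = False  # set in pass 2 after re-reading the code

    def citation(self):
        """Return the file:line reference the prompt asks for."""
        return f"{self.file}:{self.line}"


def final_report(findings):
    """Drop unverified findings, then order the rest by severity."""
    verified = [f for f in findings if f.verified]
    return sorted(
        verified,
        key=lambda f: SEVERITY_ORDER.get(f.severity, len(SEVERITY_ORDER)),
    )


findings = [
    Finding("api/auth.go", 42, "token expiry not checked", "high", True),
    Finding("web/login.tsx", 10, "possible XSS", "critical", False),
    Finding("db/001.sql", 7, "missing unique constraint", "critical", True),
]
for finding in final_report(findings):
    print(finding.citation(), finding.severity, finding.summary)
```

Defaulting `verified` to False means a finding has to earn its place in the report, which is the point of pass 2.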
